Zero-shot and few-shot learning are two interesting concepts in machine learning that aim to overcome a key shortcoming of traditional supervised methods: their need for large amounts of labeled data. These approaches enable models to generalize from limited data and handle tasks with few or no labeled training examples. Let’s dive into the details of these methods and explore some illustrative examples.

Zero-Shot Learning

Zero-shot learning is the ability of a machine learning model to recognize classes for which it has seen no labeled training examples. It achieves this by drawing on external knowledge, such as descriptions of how unseen classes relate to known ones. The main idea is to transfer knowledge from seen classes to unseen classes so that the model can make predictions without any examples of the new class.

How Zero-Shot Learning works:

Zero-shot learning relies on semantic representations, such as attribute vectors or word embeddings, that describe both seen and unseen classes. Because these representations capture the relationships among classes, they let the model transfer what it learned about known classes to unknown ones. There are two broad approaches to zero-shot learning: attribute-based and embedding-based.

- Attribute-Based Approach: In this approach, each class is described by a set of attributes or features. For example, animals can be described by attributes like “has wings,” “can fly,” “is furry,” etc. During training, the model learns to recognize these attributes from examples of the seen classes. During inference, it predicts the attributes of a new input and assigns it to the unseen class whose attribute description matches best.

- Embedding-Based Approach: Here, the semantic meaning of each class is captured by a word embedding or another vector representation. The model learns a mapping from images (or other data points) into this embedding space. During inference, it maps a new input into the space and assigns it to the class, seen or unseen, whose embedding lies closest, so unseen classes can be identified as long as their embeddings are available.
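The embedding-based approach can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the 3-dimensional class vectors are hypothetical stand-ins for real word embeddings, the image features are synthetic, and a ridge-regression mapping plus cosine similarity is just one simple choice of compatibility function. Only dog, cat, and bird features are used for training; the zebra is recognized solely through its embedding.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 3-d semantic vectors standing in for real word embeddings.
class_embeddings = {
    "dog":   np.array([1.0, 0.2, 0.0]),
    "cat":   np.array([0.8, 0.0, 0.3]),
    "bird":  np.array([0.0, 1.0, 0.1]),
    "zebra": np.array([0.6, 0.1, 1.0]),  # unseen: no training features at all
}
seen = ["dog", "cat", "bird"]

# Synthetic 6-d "image features": a hidden linear function of the semantics plus noise.
A = rng.normal(size=(6, 3))

def make_features(name, n):
    return class_embeddings[name] @ A.T + 0.02 * rng.normal(size=(n, 6))

# Fit a linear map W (features -> semantic space) on seen classes only,
# using ridge regression: W = (X^T X + lam I)^-1 X^T S.
n_per_class = 1000
X = np.vstack([make_features(c, n_per_class) for c in seen])
S = np.vstack([np.tile(class_embeddings[c], (n_per_class, 1)) for c in seen])
lam = 1e-2
W = np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ S)

def predict(x, candidates):
    """Project x into the semantic space; return the most cosine-similar class."""
    z = x @ W
    names = list(candidates)
    E = np.stack([class_embeddings[c] for c in names])
    sims = E @ z / (np.linalg.norm(E, axis=1) * np.linalg.norm(z))
    return names[int(np.argmax(sims))]

# Candidates include both seen and unseen classes (the "generalized" setting).
preds = [predict(x, class_embeddings) for x in make_features("zebra", 5)]
print(preds)
```

Because the learned mapping is linear and the three seen-class embeddings span the semantic space, the zebra's embedding remains reachable even though no zebra features were used to fit `W`. Real systems typically replace the linear map with a neural network trained on far richer data.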

Example: Suppose a model has learned to recognize several animals, such as dogs, cats, and birds. Now suppose we want it to recognize a new animal, a zebra, without any labeled zebra examples. Zero-shot learning lets us use attributes of zebras, such as “has stripes,” “is a mammal,” and “lives in Africa,” or the word embedding of “zebra,” so that the model can recognize zebra images.
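For the attribute-based variant of the zebra example, the inference step might look like the sketch below. It assumes hypothetical per-attribute classifiers, trained on seen classes, that output the probability of each attribute for a photo; those probabilities are simply hard-coded here. Each class, including the unseen zebra, is scored by how likely its binary attribute signature is under those probabilities. This is a simplified score in the spirit of direct attribute prediction that treats attributes as independent and ignores attribute priors; the attribute list and signatures are illustrative, not taken from a real dataset.

```python
import numpy as np

ATTRIBUTES = ["has_stripes", "is_mammal", "lives_in_africa", "has_wings", "barks"]

# Hypothetical binary attribute signatures (1 = class has the attribute).
CLASS_ATTRIBUTES = {
    "dog":   [0, 1, 0, 0, 1],
    "cat":   [0, 1, 0, 0, 0],
    "bird":  [0, 0, 0, 1, 0],
    "zebra": [1, 1, 1, 0, 0],  # unseen class: known only through its attributes
}

def attribute_scores(attr_probs, class_attributes, eps=1e-6):
    """Log-probability of each class's signature, assuming independent attributes."""
    p = np.clip(np.asarray(attr_probs, dtype=float), eps, 1 - eps)
    scores = {}
    for name, signature in class_attributes.items():
        sig = np.asarray(signature)
        scores[name] = float(np.sum(sig * np.log(p) + (1 - sig) * np.log(1 - p)))
    return scores

# Hypothetical attribute-classifier output for a zebra photo, in ATTRIBUTES order.
image_attr_probs = [0.9, 0.8, 0.7, 0.05, 0.1]
scores = attribute_scores(image_attr_probs, CLASS_ATTRIBUTES)
prediction = max(scores, key=scores.get)
print(prediction)  # zebra
```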

Few-Shot Learning

Few-shot learning (FSL) aims to enable models to learn new tasks with only a few labeled examples. This is particularly useful in situations where it is impossible or too expensive to collect large amounts of labeled data. FSL focuses on rapid adaptation and generalization from a small number of examples.

How Few-Shot Learning works:

Few-shot learning generally relies on meta-learning: a model is trained on a wide variety of tasks so that it develops the ability to learn new tasks quickly from little data. There are three main approaches to few-shot learning: metric-based, optimization-based, and memory-based methods.
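Meta-learning for few-shot learning is commonly organized into episodes: each training task is an N-way K-shot problem with a small labeled support set and a query set drawn from the same N classes. The sketch below, using only Python's standard library, shows one way such an episode could be sampled; the function name `sample_episode` and the toy dataset are hypothetical.

```python
import random

def sample_episode(dataset, n_way, k_shot, n_query, rng=random):
    """Sample one N-way K-shot episode from a dict mapping class name -> examples.

    Returns the chosen class names, a support set of n_way * k_shot
    (example, label) pairs, and a query set of n_way * n_query pairs.
    Labels are re-indexed 0..n_way-1 within the episode.
    """
    classes = rng.sample(sorted(dataset), n_way)
    support, query = [], []
    for label, cls in enumerate(classes):
        picked = rng.sample(dataset[cls], k_shot + n_query)  # no example is reused
        support += [(x, label) for x in picked[:k_shot]]
        query += [(x, label) for x in picked[k_shot:]]
    return classes, support, query

# Toy dataset: 10 hypothetical classes with 20 placeholder "images" each.
dataset = {f"class_{c}": [f"img_{c}_{i}" for i in range(20)] for c in range(10)}
classes, support, query = sample_episode(dataset, n_way=5, k_shot=1, n_query=3,
                                         rng=random.Random(0))
print(len(classes), len(support), len(query))  # 5 5 15
```

A meta-learner would repeat this over many episodes so that it is always trained under the same few-example conditions it will face at test time. Re-indexing labels within each episode keeps the model from simply memorizing global class identities.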

- Metric-Based Approach: This approach learns a similarity or distance metric that can be used to compare new examples with a few labeled examples. One popular method is the Prototypical Network, which computes a prototype (the mean embedding) for each class from its few labeled examples and classifies new examples by their distance to these prototypes.

- Optimization-Based Approach: Here, the model is trained to adapt to novel tasks with only a few gradient updates. Model-agnostic meta-learning (MAML), a popular technique, learns an initialization of the model's parameters from which a few fine-tuning steps on a small amount of new data are enough to perform well on a new task.

- Memory-Based Approach: This approach uses memory-augmented neural networks, which store information from previously seen examples and tasks in an external memory and retrieve it when learning new ones. Examples of architectures built around an external memory include the Neural Turing Machine (NTM) and the Differentiable Neural Computer (DNC).
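The optimization-based idea behind MAML can be illustrated with a deliberately tiny model. The sketch below assumes a hypothetical family of regression tasks `y = a * x` with a task-specific slope `a` between 2 and 4, and a one-parameter model `f(x) = w * x`. Because the model is so small, the inner-loop gradient and its second derivative can be written by hand, which makes MAML's second-order term explicit; real implementations use automatic differentiation and neural networks instead.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_task():
    """Hypothetical task family: y = a * x, with a slope a drawn from [2, 4]."""
    a = rng.uniform(2.0, 4.0)
    def draw(n):
        x = rng.uniform(-1.0, 1.0, size=n)
        return x, a * x
    return draw

def loss_and_grad(w, x, y):
    """Mean squared error of f(x) = w * x and its derivative with respect to w."""
    err = w * x - y
    return np.mean(err ** 2), 2.0 * np.mean(err * x)

def maml(steps=300, tasks_per_step=10, k_shot=5, alpha=0.5, beta=0.5):
    w = 0.0  # the initialization being meta-learned
    for _ in range(steps):
        meta_grad = 0.0
        for _ in range(tasks_per_step):
            draw = sample_task()
            xs, ys = draw(k_shot)  # support set: used for the inner update
            xq, yq = draw(k_shot)  # query set: evaluates the adapted model
            _, gs = loss_and_grad(w, xs, ys)
            w_adapted = w - alpha * gs              # inner-loop gradient step
            _, gq = loss_and_grad(w_adapted, xq, yq)
            hess = 2.0 * np.mean(xs ** 2)           # second derivative of support loss
            meta_grad += gq * (1.0 - alpha * hess)  # chain rule through the inner step
        w -= beta * meta_grad / tasks_per_step      # outer (meta) update
    return w

w0 = maml()
print("meta-learned initialization:", round(float(w0), 2))

# Adapt to a brand-new task with one gradient step on 5 examples.
draw = sample_task()
xs, ys = draw(5)
xq, yq = draw(50)
before, _ = loss_and_grad(w0, xq, yq)
_, g = loss_and_grad(w0, xs, ys)
after, _ = loss_and_grad(w0 - 0.5 * g, xq, yq)
print(f"query MSE before adaptation: {before:.3f}, after: {after:.3f}")
```

For this toy problem the learned initialization settles near the average slope of 3, from which one inner step moves toward any particular task's slope. With richer models, the same outer loop can learn initializations that encode much more structure.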

Example: Suppose we have trained a model to classify flowers as roses, daisies, or tulips. Now we want it to recognize a new type of flower, an orchid, but only a handful of labeled orchid examples are available. With meta-learning, the model can learn this new task quickly from those few examples, making correct classification of orchids possible.
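The orchid example maps naturally onto the Prototypical Network described earlier. In the sketch below, the 2-dimensional embeddings are hypothetical stand-ins for the output of a trained encoder; in a real system they would come from a network trained on many episodes. Adding the orchid class requires no retraining in this setup: its prototype is just the mean of its two labeled embeddings, and queries are assigned to the nearest prototype by squared Euclidean distance.

```python
import numpy as np

def prototypes(embeddings, labels):
    """Return the sorted class names and each class's mean embedding (its prototype)."""
    labels = np.asarray(labels)
    classes = sorted(set(labels.tolist()))
    protos = np.stack([embeddings[labels == c].mean(axis=0) for c in classes])
    return classes, protos

def classify(queries, classes, protos):
    """Assign each query to the nearest prototype; also return softmax(-distance)."""
    d = ((queries[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    logits = -d - (-d).max(axis=1, keepdims=True)  # shift for numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    return [classes[i] for i in d.argmin(axis=1)], probs

# Hypothetical encoder outputs for the labeled support set.
support = np.array([
    [0.1, 0.2], [-0.2, 0.1], [0.0, -0.1],   # rose
    [5.1, 0.0], [4.8, 0.3], [5.0, -0.2],    # daisy
    [0.2, 5.1], [-0.1, 4.9], [0.1, 5.2],    # tulip
    [5.2, 4.9], [4.9, 5.1],                 # orchid: only two labeled examples
])
labels = ["rose"] * 3 + ["daisy"] * 3 + ["tulip"] * 3 + ["orchid"] * 2

queries = np.array([[4.7, 5.3], [0.3, 0.0]])
preds, probs = classify(queries, *prototypes(support, labels))
print(preds)  # ['orchid', 'rose']
```

The softmax over negative distances gives class probabilities in the same way Prototypical Networks do during training; how useful those probabilities are depends largely on how well the encoder separates classes it has never seen.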

Applications of Zero-Shot and Few-Shot Learning

- NLP: Common uses of zero-shot and few-shot learning in NLP include text classification, sentiment analysis, and machine translation. For example, a zero-shot model might classify text into new categories by using semantic representations of the category names, while a few-shot model could quickly adapt to new languages or dialects from very few examples.

- Computer Vision: In computer vision, zero-shot and few-shot learning are applied to object detection, image classification, and facial recognition. They can identify new objects or faces with little or no labeled data for those classes, which makes them useful in areas such as security, healthcare, and autonomous driving.

- Robotics: Zero-shot and few-shot learning can help robots operate in novel environments with minimal human effort. For example, a robot can learn new movements or explore new spaces from only a few demonstrations or instructions.

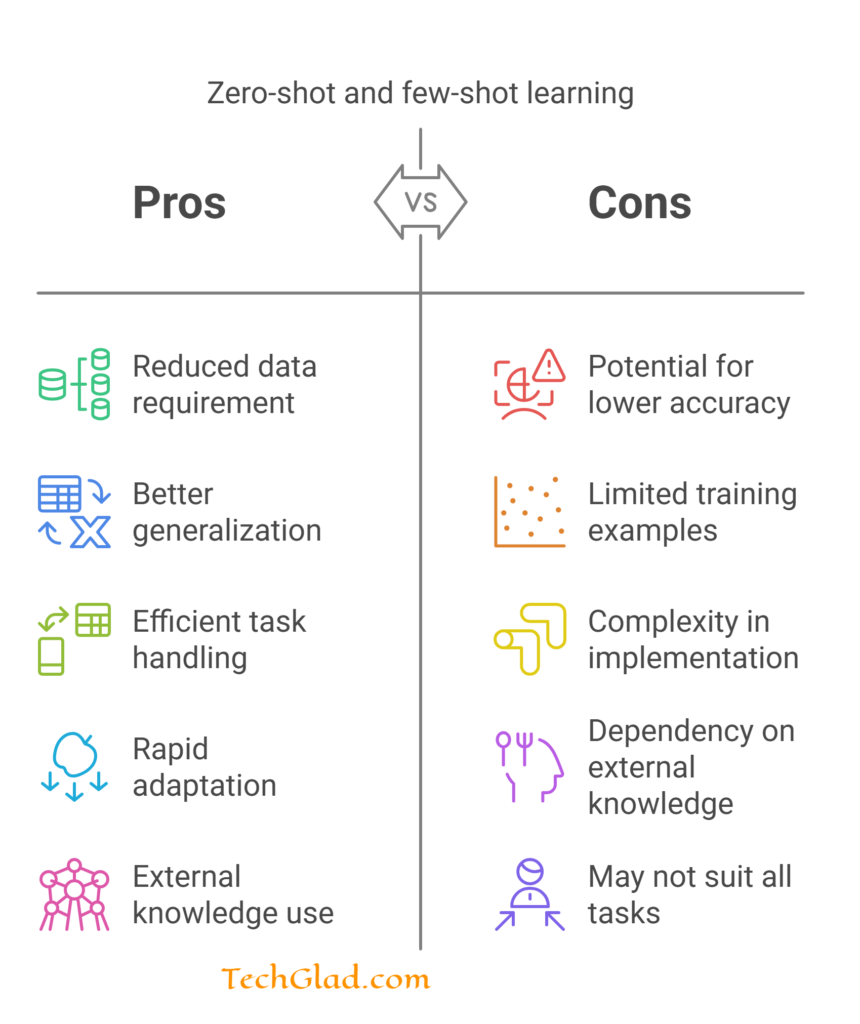

Advantages and Challenges of Zero-Shot and Few-Shot Learning

Advantages:

- Data Efficiency: Zero-shot and few-shot learning reduce the need for large amounts of labeled data, making it easier and more cost-effective to train models.

- Rapid Adaptation: These techniques enable models to adapt quickly to new tasks and environments, making them more versatile.

- Knowledge Transfer: By exploiting external knowledge and relationships between classes, zero-shot and few-shot models can generalize beyond the classes they were trained on.

Challenges:

- Model Complexity: Zero-shot and few-shot learning methods can be complex to implement, since they require sophisticated architectures and training techniques.

- Performance: Although these techniques show promising results, they may still lag behind conventional models trained on large amounts of labeled data.

- Data Representation: The performance of zero-shot and few-shot models depends heavily on the quality of the semantic representations and embeddings they use.

Conclusion

Zero-shot and few-shot learning are important milestones in machine learning because they ease a key limitation of earlier approaches: the need for large amounts of labeled data. By drawing on external knowledge and building the capacity for rapid adaptation, these approaches let models generalize from limited data and handle new tasks efficiently.

As research progresses, zero-shot and few-shot learning are likely to become increasingly important for building flexible and efficient AI systems. The ability to learn from few or no examples can drive innovation and expand the reach of machine learning in natural language processing, computer vision, and robotics.