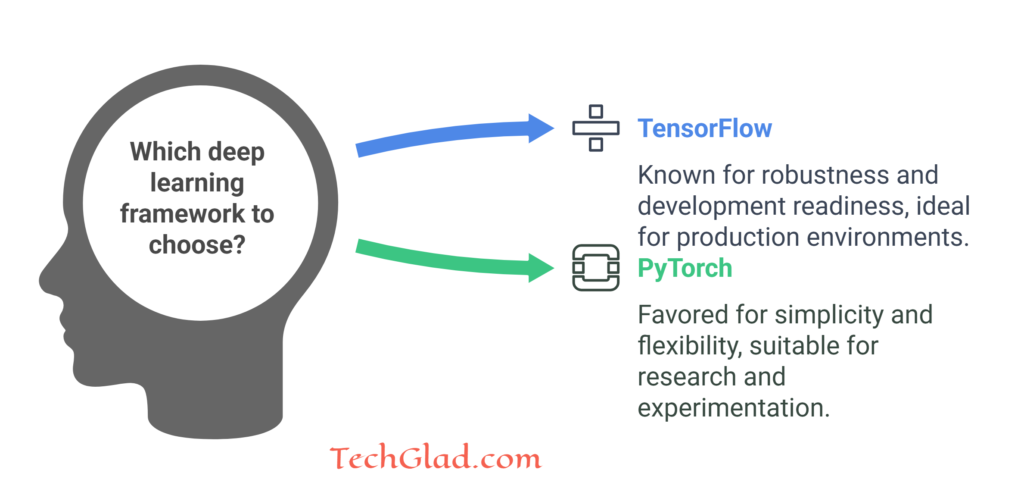

TensorFlow and PyTorch are two of the most widely used frameworks for deep learning. TensorFlow, developed by Google Brain, is known for its robustness and production readiness. PyTorch, developed by Facebook's AI Research lab (now part of Meta), has become a favorite of researchers and developers for its simplicity and flexibility. Choosing one over the other calls for weighing features, performance, community support, and deployment capabilities.

Usability and Learning Curve

TensorFlow initially had a steep learning curve, but with TensorFlow 2.0 and the integration of the Keras API it has become much more user-friendly. PyTorch is known for its intuitive, Pythonic syntax, which makes it easier to learn, especially for beginners. Its dynamic computational graph allows for on-the-fly modifications, which is ideal for research and experimentation.

In its early versions, TensorFlow was verbose and hard to understand. Newcomers had to climb a steep learning curve before gaining even a basic understanding of the system, and many were discouraged by it.

PyTorch, on the other hand, has been consistently praised for its ease of use and intuitive syntax. Designed with a Pythonic approach, it mirrors Python's natural coding style, which lowers the barrier to entry. Its dynamic computational graph lets users modify the graph on the fly, making it well suited to rapid experimentation and iterative research. This flexibility is especially useful for researchers, who are constantly testing and tweaking models and want to do so with little overhead.
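As a minimal sketch of what a dynamic graph can look like in practice, the snippet below uses an ordinary Python `while` loop whose iteration count depends on the data, and still obtains gradients. The function name `forward`, the threshold of 10.0, and the cap of 5 steps are illustrative assumptions, not part of any particular model.

```python
import torch


def forward(x: torch.Tensor, w: torch.Tensor) -> torch.Tensor:
    """Toy forward pass whose number of steps depends on the data."""
    h = x @ w
    steps = 0
    # Plain Python control flow: the graph is recorded as the code runs,
    # so each call may build a different graph.
    while h.norm() < 10.0 and steps < 5:
        h = h * 2.0
        steps += 1
    return h.sum()


x = torch.ones(1, 3)
w = torch.ones(3, 3, requires_grad=True)

loss = forward(x, w)   # the loop body runs once for these inputs
loss.backward()        # autograd follows the path that was actually taken

print(loss.item())     # 18.0
print(w.grad)          # every entry is 2.0
```

Because the graph is rebuilt on every call, changing the loop condition or adding a branch requires no separate compilation step, which is the property the paragraph above describes.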

Flexibility

TensorFlow provides both static and dynamic computational graphs. Static graphs can be optimized and deployed efficiently, which makes them helpful in production environments. PyTorch is built around dynamic computation graphs, which appeals to researchers who want to build and debug different architectures without major overhead.

TensorFlow's flexibility comes from supporting both static and dynamic computational graphs, which makes it suitable for many types of applications. The static graph is what comes in handy in production: once it is defined, the graph can be optimized and fine-tuned to deliver the best performance.
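To illustrate the two modes, here is a small sketch assuming TensorFlow 2.x, where code runs eagerly by default and `tf.function` traces a Python function into a static graph. The function name `scaled_sum` and its inputs are hypothetical and chosen only for this example.

```python
import tensorflow as tf


@tf.function  # traces the Python function into a reusable static graph
def scaled_sum(x, scale):
    return tf.reduce_sum(x * scale)


x = tf.constant([1.0, 2.0, 3.0])
scale = tf.constant(2.0)

# Called like a normal function; TensorFlow executes the traced graph.
result = scaled_sum(x, scale)

# The traced graph can be inspected (and, in principle, optimized or exported).
concrete = scaled_sum.get_concrete_function(x, scale)
op_types = [op.type for op in concrete.graph.get_operations()]

print(float(result))  # 12.0
print(op_types)
```

Removing the `@tf.function` decorator would run the same code eagerly, one operation at a time, which is usually more convenient while debugging.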

PyTorch is known for the flexibility that comes with its dynamic computation graph, which makes building and debugging models more interactive and exploratory. Researchers and developers can define and modify the graph structure on the fly, supporting more experimental and adaptive workflows. This is very useful in research settings where novel model architectures and approaches are constantly tested and iterated.

Performance

TensorFlow is optimized for high-performance computing on multi-core and multi-GPU hardware. It also supports many optimization techniques, including distributed computing, which makes it appropriate for production-scale usage.

PyTorch is similarly efficient and production-friendly. With TorchScript, it serves research, development, and production alike, and it holds its ground against TensorFlow in computationally demanding environments. It scales across multiple CPUs and GPUs and can train and deploy large-scale models.

PyTorch has also made considerable progress on performance. Although it initially focused on research prototyping, today it handles most production use cases well.

TorchScript makes it possible to serialize and optimize PyTorch models, which smooths their deployment in production scenarios. This makes PyTorch a strong option for both research and practical deployment.
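The serialization step can be sketched as follows, assuming a PyTorch version in which `torch.jit.script`, `torch.jit.save`, and `torch.jit.load` are available. The `TinyNet` module is a hypothetical stand-in for a real model, and an in-memory buffer is used here in place of a file on disk.

```python
import io

import torch


class TinyNet(torch.nn.Module):
    """Hypothetical two-output model used only for illustration."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = torch.nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.linear(x))


model = TinyNet().eval()

# Compile the module to TorchScript, then serialize it.
scripted = torch.jit.script(model)
buffer = io.BytesIO()
torch.jit.save(scripted, buffer)

# Load it back; the Python class definition is not needed at this point.
buffer.seek(0)
restored = torch.jit.load(buffer)

x = torch.randn(3, 4)
with torch.no_grad():
    same = torch.allclose(model(x), restored(x))

print(same)  # True
```

In a real deployment the buffer would typically be a file path, and the saved artifact could be loaded from a process that does not have the original Python source.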

Community and Ecosystem

TensorFlow has a more expansive community and ecosystem that provides tools such as TensorFlow Lite, TensorFlow Serving, and TensorFlow Extended (TFX). PyTorch has a rapidly growing community and an expanding ecosystem with tools such as PyTorch Hub and PyTorch Lightning.

Models can be exchanged between TensorFlow and PyTorch through the ONNX format. Community and ecosystem are among TensorFlow's main strengths. With a bigger community, TensorFlow offers more support through forums, tutorials, and documentation.

The ecosystem is equally strong, providing tools such as TensorFlow Lite for mobile and embedded devices, TensorFlow Serving for model deployment, and TensorFlow Extended (TFX) for end-to-end machine learning pipelines. This all-in-one toolkit makes TensorFlow a versatile choice for every stage of the machine learning workflow.

Although PyTorch is younger, its community has quickly grown to be active and supportive. Its ecosystem is expanding fast with valuable tools such as PyTorch Hub, a repository of pre-trained models, and PyTorch Lightning, which simplifies model building and training. The ONNX (Open Neural Network Exchange) format provides interoperability between deep learning frameworks, including TensorFlow and PyTorch. This interoperability makes PyTorch even more flexible and practical across different scenarios.

Visualization and Debugging

TensorFlow provides TensorBoard, a powerful tool for visualizing, tracking, and debugging machine learning experiments. PyTorch works with third-party visualization libraries such as Visdom and TensorBoardX.

TensorBoard provides interactive visualizations, such as scalars, histograms, and graphs, to monitor model performance and diagnose issues. This level of insight is invaluable for developers seeking to understand and improve their models.

PyTorch does not ship a dedicated visualization tool of its own, but it works with third-party libraries such as Visdom and TensorBoardX, and recent releases can also log to TensorBoard through the `torch.utils.tensorboard` module. These libraries can track training progress with visualizations similar to TensorBoard's. PyTorch users therefore have robust visualization and debugging capabilities, albeit through external tools.

Conclusion

In conclusion, if you are concerned about ease of use, flexibility, and dynamic computation graphs, PyTorch might be a better choice, especially for research and experimentation. However, if you need a robust and production-ready framework with extensive deployment capabilities, TensorFlow could be more suitable. TensorFlow’s comprehensive ecosystem and tools make it ideal for large-scale machine-learning solutions.

Ultimately, both TensorFlow and PyTorch are powerful frameworks, each with its own strengths. The best approach is to experiment with both and determine which one aligns better with your specific goals and requirements.

TensorFlow and PyTorch offer distinct advantages and cater to different needs. PyTorch is more flexible and research-friendly, while TensorFlow is a good choice for large-scale production environments because of its solid ecosystem and deployment capabilities.

You can opt for either framework based on which one's strengths best match your project's goals and requirements. Whether you prefer PyTorch for its dynamic graph capabilities or TensorFlow for its rich set of tools, both frameworks offer strong support for developing and deploying complex machine learning models.