Apache Spark is one of the most powerful and versatile tools in the data scientist's toolkit. It processes large datasets quickly while remaining relatively easy to use, which makes it an essential tool for beginners and experienced data scientists alike. In this beginner's guide, we'll explore the foundations of Apache Spark, how it works, and how you can use it in your data science projects.

What is Apache Spark?

Apache Spark is an open-source, fast, in-memory engine for large-scale data processing that distributes work across a cluster of machines. It provides an API for programming an entire cluster with implicit data parallelism and fault tolerance. Spark is designed to be both easy to use and fast, and it supports the major data processing workloads: batch processing, real-time streaming, machine learning, and graph processing.

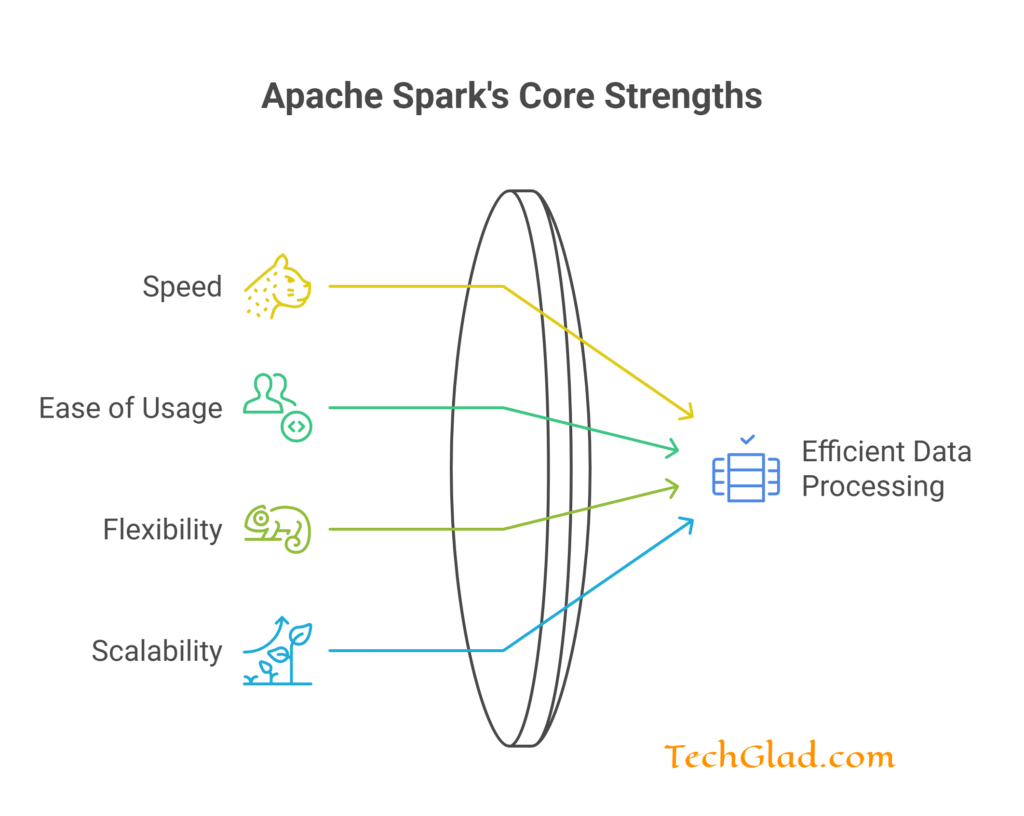

Main Features of Apache Spark

- Speed: Spark is known for its fast processing. For some workloads it can run up to 100 times faster than Hadoop MapReduce, largely because it keeps intermediate data in memory. This reduces repeated reads and writes to disk, which significantly speeds up iterative and multi-step jobs.

- Ease of use: Spark offers high-level APIs in Python, Java, Scala, and R, so programmers can work in the language they already know. PySpark, the Python API, is especially popular because it lets data scientists write Spark code with familiar Python syntax.

- Flexibility: Spark supports a wide range of data processing tasks: batch processing through Spark Core, real-time data processing through Spark Streaming, machine learning through MLlib, and graph processing through GraphX. This lets users run multiple data science workflows within a single framework.

- Scalability: Spark scales from a single machine to clusters of thousands of nodes. It distributes both data and computation across those machines, which makes it effective for large-scale data processing.

Getting Started with Apache Spark

Getting started is straightforward: set up your environment, then learn Spark's core programming concepts. Here is a step-by-step guide to get you up and running.

- Install Apache Spark: Before using Spark, download and install it on your local machine or cluster. You can get Apache Spark from its official website and follow the installation instructions for your operating system. Alternatively, you can use a managed Spark environment such as Databricks.

- Set up PySpark: If you use Python, install PySpark, Spark's Python API. It is available as a Python package and can be installed with pip:

pip install pyspark

- Start a SparkSession: The SparkSession is your entry point to Spark. In PySpark, you create one (or reuse an existing one) like this:

from pyspark.sql import SparkSession

# Create a new SparkSession, or return the one that already exists
spark = SparkSession.builder \
    .appName("MySparkApp") \
    .getOrCreate()

- Load Data: Spark can read data from many sources, including CSV, JSON, and Parquet files as well as databases. Here's how to load a CSV file into a Spark DataFrame:

df = spark.read.csv("path/to/your/data.csv", header=True, inferSchema=True)

- Explore and Transform Data: Once the data is loaded into a DataFrame, you can use Spark’s DataFrame API to explore and transform the data. Here’s an example of some common DataFrame operations:

# Show the first few rows of the DataFrame
df.show()

# Select specific columns
df.select("column1", "column2").show()

# Filter rows based on a condition
df.filter(df["column1"] > 10).show()

# Group by a column and perform aggregation
df.groupBy("column2").agg({"column1": "mean"}).show()

- Perform Machine Learning: Spark's MLlib library provides algorithms for classification, regression, clustering, and other machine learning tasks. For example, here is how to train a logistic regression model with MLlib:

from pyspark.ml.classification import LogisticRegression
from pyspark.ml.feature import VectorAssembler

# Assemble features into a feature vector
# (assumes df has numeric columns "feature1", "feature2", and "label")
assembler = VectorAssembler(inputCols=["feature1", "feature2"], outputCol="features")
df = assembler.transform(df)

# Train a logistic regression model
lr = LogisticRegression(featuresCol="features", labelCol="label")
model = lr.fit(df)

# Make predictions (on the training data here; use held-out data in practice)
predictions = model.transform(df)
predictions.show()

- Save and Load Models: You can save a trained model and load it later to make predictions, which is useful when deploying models to production. To save and load a model in Spark:

# Save the model
model.save("path/to/save/model")

# Load the model
from pyspark.ml.classification import LogisticRegressionModel
loaded_model = LogisticRegressionModel.load("path/to/save/model")

Real-World Applications of Apache Spark in Data Science

Real-Time Analytics: Spark's support for real-time data processing through Spark Streaming makes it well suited to real-time analytics, such as monitoring social media feeds, detecting fraud, and analyzing log data. In these scenarios, data is processed as it arrives, so decisions and responses can follow soon after an event occurs.

Example: A financial institution uses Spark Streaming to monitor transactions in real time and detect fraudulent activities. By analyzing patterns and anomalies in transaction data, they can flag and investigate suspicious transactions immediately.

Big Data Processing: Because Spark scales well, it is widely used for big data workloads such as ETL pipelines, data warehousing, and batch processing. It can process petabyte-scale datasets efficiently, which helps organizations derive insights from large volumes of data.

For instance, an e-commerce company uses Spark to process and analyze clickstream data from their website. Aggregation and analysis of such data help them understand customer behavior, optimize the user experience, and make data-driven marketing decisions.

Machine Learning at Scale: MLlib is Spark’s machine learning library, offering scalable algorithms for training models on large datasets. This makes Spark suitable for applications like recommendation systems, predictive analytics, and customer segmentation.

For example, a video streaming service could use Spark and MLlib to build a recommendation system that suggests movies and TV shows based on each user's viewing history and preferences. Personalized recommendations like these help keep users engaged with the content.

Graph Processing: GraphX, Spark's graph processing library, supports the analysis and manipulation of large-scale graphs. This makes it useful for applications such as social network analysis, fraud detection, and recommendation systems.

Example: A social media application uses GraphX to analyze the relationships and interactions between users. It can identify influential users and communities and thus improve content delivery, enhance user engagement, and optimize advertising strategies.

Conclusion

Apache Spark is a powerful and flexible tool for data science because it processes large datasets quickly and efficiently. Its high-level APIs, support for many kinds of data processing tasks, and scalability make it an essential tool for data scientists. Whether you need real-time analytics, big data processing, machine learning, or graph processing, Spark provides the tools to tackle complex data challenges.

By mastering the basics of Apache Spark and learning its features, you can open up new opportunities in your data science projects. The more you experiment with Spark, the more you will discover how it can change the way you and your organization work with data. Happy Spark-ing!